This type of attack works in one of two primary ways: either from within a credible tenant that has been compromised, or via attacker-owned infrastructure built specifically for this purpose.

Credible Tenant Compromise

In the first type of attack, an attacker has to compromise a user with sufficient permissions to create applications within the cloud tenant. This can be achieved directly, as part of lateral movement from on-premises infrastructure into the cloud, or by compromising multiple users and escalating privileges where possible.

Once the attacker has set up the application, often under a legitimate-sounding name (covered later in this blog), the threat actor then has to add users to the application for the specified permissions to take effect. This is often done by sharing a link through Teams or by sending emails from the compromised account.

If a threat actor has sufficient permissions to create OAuth applications in this manner, they may also have permissions to modify the admin consent requirement. While the two are not governed by the same permissions, gaining access to a highly privileged role may provide the path needed to achieve this.

A less frequent attack within a compromised credible tenant is to modify an existing OAuth application. While this is significantly less common than the technique discussed above, the steps are largely the same.

Attacker-Owned Infrastructure

With attacker-owned infrastructure, a threat actor builds a cloud tenant for the sole purpose of hosting malware and compromising users.

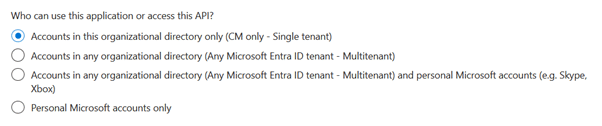

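When registering a malicious OAuth application, the attacker is presented with an option that controls whether accounts in other organizations can interact with the application:

The second option, which allows accounts in any organizational directory (multitenant) to use the application, is often chosen for this purpose.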

The threat actor then sends users a link, often via phishing emails, prompting them to consent to the application; the rest of the attack flow follows the first technique described above.
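When triaging a reported phishing email, it can help to unpack the consent link itself. The sketch below assumes the common Microsoft identity platform authorize URL shape (`https://login.microsoftonline.com/<tenant>/oauth2/v2.0/authorize?client_id=...&scope=...`); the function name `inspect_consent_link` and its returned fields are hypothetical, for illustration only. A tenant segment of `common` or `organizations` is what a multi-tenant application would typically use, and the extracted `client_id` can then be looked up in your own tenant.

```python
from urllib.parse import urlparse, parse_qs

# Authorize endpoints of the Microsoft identity platform (v2.0 and v1).
AUTHORIZE_PATHS = ("/oauth2/v2.0/authorize", "/oauth2/authorize")


def inspect_consent_link(url):
    """Return tenant, client_id and scopes from an OAuth authorize link, or None."""
    parsed = urlparse(url)
    if parsed.hostname != "login.microsoftonline.com":
        return None
    if not parsed.path.endswith(AUTHORIZE_PATHS):
        return None
    params = parse_qs(parsed.query)
    # The path looks like /<tenant>/oauth2/v2.0/authorize.
    tenant = parsed.path.strip("/").split("/")[0]
    return {
        "tenant": tenant,
        "client_id": params.get("client_id", [None])[0],
        # Scopes are space-separated (URL-encoded as %20 or +).
        "scopes": params.get("scope", [""])[0].split(),
    }
```

Scopes such as `offline_access` combined with mailbox or file permissions are worth a closer look, since they let the application keep acting on the user's behalf.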

The benefit of this latter technique is that it does not first require compromising a user with sufficient permissions to create or modify an application within the tenant. The downside is that users can be harder to compromise, especially in hardened tenants whose users have received awareness training. It is also easier to detect than modifying an existing application, provided the right logs are being collected.

Malicious OAuth applications matching compromised usernames, and other suspicious naming conventions

In an attempt to remain undetected in compromised environments, threat actors have been using several naming patterns:

- The malicious OAuth application is named after the originally compromised user.

- The application contains the word “Test”.

- Use of non-alphanumeric characters as the application name.

After a threat actor has compromised a user account with sufficient permissions, they will often create a new OAuth2 application within the EntraID tenant named to match the username of the compromised account. This is an attempt to evade detection by blending in under a legitimate-sounding name.

This technique has been observed firsthand in several CyberMaxx IR incidents, and by the Proofpoint team across various MACT campaigns; in MACT campaign 1445, such applications accounted for 28% of all applications (https://www.proofpoint.com/uk/blog/cloud-security/revisiting-mact-malicious-applications-credible-cloud-tenants).

Another technique observed in the wild is naming the malicious OAuth application “test”, “testapp”, or similar variants: a short, non-descriptive name chosen, again, to avoid raising suspicion.

The final technique we have seen is naming the application with non-alphanumeric characters, most commonly “…”, although several other variants exist.

All of the above techniques have been observed in the wild, with the first used primarily in compromised tenants and the latter two used as part of multi-tenant compromise campaigns, often through consent phishing attacks.
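As a rough triage aid, the three naming patterns can be checked with simple string heuristics. This is a minimal sketch, assuming you have already exported application display names (for example from app registrations or enterprise applications) along with a list of user display names and UPNs; `suspicious_name_reasons` is a hypothetical helper, not part of CloudSweep.

```python
import re


def suspicious_name_reasons(app_name, user_names):
    """Return which of the naming patterns above an application display name matches."""
    reasons = []
    name = app_name.strip().casefold()

    # Pattern 1: named after a user. Compare against full names/UPNs and UPN local parts.
    candidates = set()
    for user in user_names:
        user = user.strip().casefold()
        candidates.add(user)
        candidates.add(user.split("@")[0])
    if name in candidates:
        reasons.append("matches user name")

    # Pattern 2: contains "test" (e.g. "test", "testapp", "Test App").
    if "test" in name:
        reasons.append("contains 'test'")

    # Pattern 3: no letters or digits at all (e.g. "…" or "...").
    if not re.search(r"[^\W_]", app_name):
        reasons.append("no alphanumeric characters")

    return reasons
```

The “test” check is deliberately loose and will also match names such as “Latest Reports”, so treat hits as leads for review rather than verdicts.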

OAuth2 Backdoored Accounts

When a user authenticates to EntraID, an entry is written to the sign-in logs containing details such as a unique ID for the event, the username and display name, the application used, the time, and an error code (0 if successful), among many other fields. An error code of 0 is therefore a good indication of a successful sign-in.
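The fields described above map onto properties of the sign-in record returned by Microsoft Graph (`id`, `userPrincipalName`, `userDisplayName`, `appDisplayName`, `createdDateTime`, and `status.errorCode`). The sketch below, using a hypothetical `summarize_sign_in` helper, pulls them out of a single event and treats an error code of 0 as a successful sign-in.

```python
def summarize_sign_in(event):
    """Extract the key fields of one sign-in event and flag whether it succeeded."""
    status = event.get("status") or {}
    error_code = status.get("errorCode")
    return {
        "id": event.get("id"),
        "user": event.get("userPrincipalName"),
        "display_name": event.get("userDisplayName"),
        "app": event.get("appDisplayName"),
        "time": event.get("createdDateTime"),
        "error_code": error_code,
        # An errorCode of 0 means the sign-in succeeded.
        "succeeded": error_code == 0,
    }
```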

Using the Microsoft Graph API, we can retrieve these logs programmatically instead of logging in to the Azure portal and retrieving them manually. This is done by querying the https://graph.microsoft.com/v1.0/auditLogs/signIns endpoint or, alternatively, its beta counterpart: https://graph.microsoft.com/beta/auditLogs/signIns.

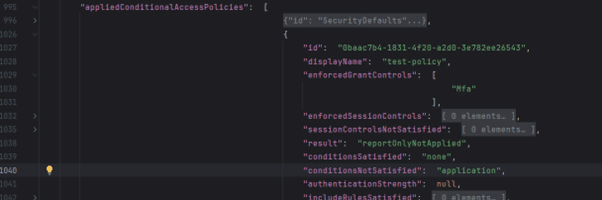

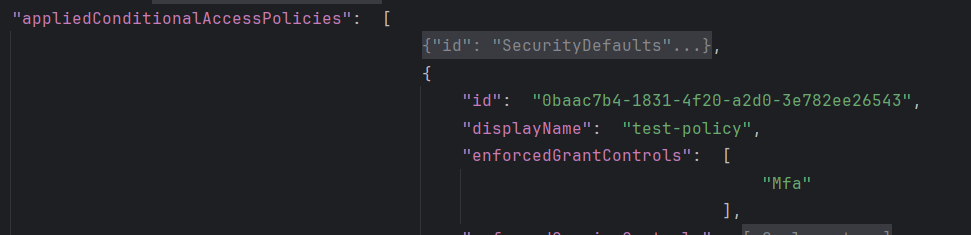
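A minimal sketch of querying the endpoint with only the Python standard library is shown below. It assumes you have already obtained an access token for Microsoft Graph with permission to read sign-in logs (for example `AuditLog.Read.All`; check Microsoft's documentation for the full set required). Graph returns results in pages, so the hypothetical `iter_sign_ins` helper keeps following the `@odata.nextLink` URL until none is returned. The page fetcher is passed in as a function, which keeps the paging logic testable without network access.

```python
import json
import urllib.request

SIGN_INS_URL = "https://graph.microsoft.com/beta/auditLogs/signIns"


def graph_get(url, token):
    """GET a Graph URL with a bearer token and return the decoded JSON body."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def iter_sign_ins(fetch_page, url=SIGN_INS_URL):
    """Yield sign-in events, following @odata.nextLink until no pages remain."""
    while url:
        page = fetch_page(url)
        yield from page.get("value", [])
        url = page.get("@odata.nextLink")
```

For example, `for event in iter_sign_ins(lambda url: graph_get(url, token)): ...` walks every sign-in the token can see. In a large tenant you would normally narrow the query with a `$filter` on `createdDateTime`.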

The benefit of using the beta version of this endpoint is that it exposes some additional fields that can be correlated together. Drilling down into the raw event, there is a field called “appliedConditionalAccessPolicies”, which lists all of the Conditional Access policies (CAPs) applied to that specific user for that specific sign-in event. By iterating through each of these CAP IDs and checking the “enforcedGrantControls” field, it is possible to determine a) whether MFA is being applied for this user and b) which specific CAP is responsible for the MFA enforcement. The field looks like the below:

Each policy contains several other fields, such as the display name shown in the Azure portal and the “result” field, which indicates whether the policy is being enforced or only reported on (enforcing MFA through Conditional Access policies requires Security Defaults to be disabled).
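The sketch below iterates over `appliedConditionalAccessPolicies` for one sign-in event and returns the policies that enforced MFA. Two assumptions are worth flagging: that the MFA grant control appears in `enforcedGrantControls` as a string containing “mfa” or “multifactor” (for example `"Mfa"`; verify against your own logs), and that a `result` of `"success"` marks an enforced policy, while report-only policies carry `reportOnly`-prefixed results. The helper name `mfa_policies` is hypothetical.

```python
def mfa_policies(event):
    """Return the CA policies that enforced MFA for a single sign-in event."""
    enforcing = []
    for policy in event.get("appliedConditionalAccessPolicies") or []:
        # Assumption: the MFA grant control contains "mfa" or "multifactor".
        controls = " ".join(policy.get("enforcedGrantControls") or []).casefold()
        requires_mfa = "mfa" in controls or "multifactor" in controls
        # Assumption: "success" = enforced and satisfied; report-only policies
        # use results such as "reportOnlySuccess" and are therefore skipped.
        if requires_mfa and policy.get("result") == "success":
            enforcing.append(policy.get("displayName") or policy.get("id"))
    return enforcing
```

An empty list means no applied policy enforced MFA for that sign-in; a non-empty list also answers which CAP was responsible.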

Going back to the root of the sign-in log, there are several other relevant fields. First, “resourceDisplayName” shows which resource was used for the sign-in; for a Graph API request it will show as “Microsoft Graph”. However, this display name can be spoofed, so it is not a reliable basis for detections. Directly below “resourceDisplayName” is “resourceId”, which will always show as “00000003-0000-0000-c000-000000000000” (the application ID of Microsoft Graph) when using a PowerShell application.
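Because the display name is not trustworthy, a check for Graph traffic is better keyed on the resource ID. A minimal sketch, with a hypothetical helper name:

```python
# Well-known application ID of the Microsoft Graph resource.
MICROSOFT_GRAPH_ID = "00000003-0000-0000-c000-000000000000"


def is_graph_sign_in(event):
    """Decide whether a sign-in targeted Microsoft Graph using resourceId only.

    resourceDisplayName is deliberately ignored because it can be spoofed.
    """
    return (event.get("resourceId") or "").lower() == MICROSOFT_GRAPH_ID
```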

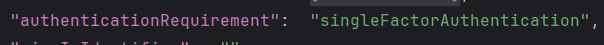

The third and final field to note is “authenticationRequirement”. For OAuth2 applications, this field will show as “singleFactorAuthentication”, because the MFA request must already have been approved for the application to complete the consent workflow.

Combining the above fields gives a strong indication of whether an account has previously consented to an OAuth application that is now actively connected to it.
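Putting the pieces together, the sketch below flags a sign-in only when all of the indicators described above line up: it succeeded (error code 0), it targeted Microsoft Graph by `resourceId`, `authenticationRequirement` is `singleFactorAuthentication`, and at least one applied Conditional Access policy enforced MFA. It assumes the MFA grant control shows up in `enforcedGrantControls` as a string containing “mfa” or “multifactor”, and that a `result` of `"success"` marks an enforced policy. It illustrates the logic described in this post and is not CloudSweep's actual implementation; `looks_backdoored` and `enforces_mfa` are hypothetical names.

```python
GRAPH_RESOURCE_ID = "00000003-0000-0000-c000-000000000000"


def enforces_mfa(policy):
    """True if a CA policy enforced an MFA grant control (assumed value format)."""
    controls = " ".join(policy.get("enforcedGrantControls") or []).casefold()
    return policy.get("result") == "success" and ("mfa" in controls or "multifactor" in controls)


def looks_backdoored(event):
    """Flag a sign-in that matches every indicator described above."""
    succeeded = (event.get("status") or {}).get("errorCode") == 0
    to_graph = (event.get("resourceId") or "").lower() == GRAPH_RESOURCE_ID
    single_factor = event.get("authenticationRequirement") == "singleFactorAuthentication"
    policies = event.get("appliedConditionalAccessPolicies") or []
    mfa_expected = any(enforces_mfa(p) for p in policies)
    return succeeded and to_graph and single_factor and mfa_expected
```

Run over the output of a sign-in query, `[e for e in events if looks_backdoored(e)]` yields the events to review.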

CloudSweep sends a request to the beta Audit Logs endpoint of the Graph API to retrieve sign-in events for all users, looking for entries that match these attributes. Matching sign-in events are flagged, and we recommend reviewing them to determine whether they are known. If they are not, this may indicate that a threat actor has gained access to a user account through a consent attack.

As a reminder, OAuth2 applications, once given consent to connect to an account, do not require MFA and will persist through password resets – making them an excellent persistence choice for threat actors.

Recommendations for attack surface reduction, and how to prevent these types of attacks

Summary Checklist:

- Enable MFA; this also helps with logging and detection

- Enforce Conditional Access policies; this helps set a baseline for IR

- Require administrator approval for users to consent to OAuth2 applications

There are a number of things we can do to defend and harden our tenant against this type of attack. First, enable MFA on all user accounts in enterprise environments. This also helps with detection, as you will have a history of where the user normally accepts MFA pushes from, and of when the malicious request was accepted. The exception is if you work in IT in a K-12 environment, where having kids complete MFA requests isn’t really an option.

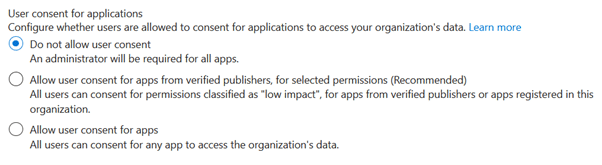

Next, require administrators to grant approval for users consenting to applications. This can be done under EntraID > Enterprise Applications > Consent and Permissions:

If you want to reduce the workload on your IT team, you can instead turn off admin consent requests via EntraID > Enterprise Applications > User Settings, which stops users from being able to ask administrators for approval. If you do this, consider allowing users to consent to applications from verified publishers for selected permissions.

- It is important to note that some malicious applications can come from “verified” publishers. Verification requires the developer to have a Microsoft Partner Network (MPN) ID and to have completed the verification process. However, if an attacker compromises a verified publisher's tenant and launches a multi-tenant compromise campaign from it, such applications may, although rarely, slip through.
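To check the current consent settings programmatically rather than through the portal, the tenant's authorization policy can be read from Microsoft Graph (`GET https://graph.microsoft.com/v1.0/policies/authorizationPolicy`, which to our understanding requires `Policy.Read.All`). The sketch below summarises the returned object, assuming Microsoft's documented permission grant policy IDs: an empty `permissionGrantPoliciesAssigned` list means users cannot consent on their own, `microsoft-user-default-low` limits consent to verified publishers and selected low-impact permissions, and `microsoft-user-default-legacy` allows users to consent to any application. The helper name `consent_posture` is hypothetical, and `allowedToCreateApps` is included because it governs whether ordinary users can register applications at all.

```python
def consent_posture(authorization_policy):
    """Summarise user-consent settings from a Graph authorizationPolicy object."""
    perms = authorization_policy.get("defaultUserRolePermissions") or {}
    assigned = perms.get("permissionGrantPoliciesAssigned") or []
    # Policies prefixed ManagePermissionGrantsForSelf govern consent users give for themselves.
    self_consent = [p for p in assigned if p.lower().startswith("managepermissiongrantsforself.")]
    return {
        "users_can_consent": bool(self_consent),
        # Assumed ID for "allow user consent for all apps" (the least restrictive option).
        "legacy_consent_enabled": any(
            p.lower().endswith("microsoft-user-default-legacy") for p in self_consent
        ),
        "users_can_register_apps": bool(perms.get("allowedToCreateApps")),
    }
```

A hardened tenant following the recommendations above would ideally report `users_can_consent` as false, or at least `legacy_consent_enabled` as false.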

Detection

If a user has MFA enabled but the “Authentication Requirement” field in their sign-in logs shows “Single-Factor Authentication”, this is a clear sign of token-based authentication and potentially a backdoored account.

Alternatively, you can use CloudSweep, a CyberMaxx tool that is regularly updated to reflect the latest attack techniques, to detect malicious OAuth usage in your environment. Find it here: https://github.com/theresafewconors/cloudsweep